DevOps is the union of people, process, and products to enable continuous delivery of value to our end users.

– Donovan Brown

Build Status: BackEnd Web API | FrontEnd Razor Page App

How often have you gone to a GitHub repo and found that the sample code is not up to date with the latest SDK, or doesn't even build?

Over the last couple of weeks, I have been updating (again) the ASP.NET Core Workshop, which the team uses for one- and two-day sessions at various conferences, to .NET Core 2.1. As part of that, we wanted to make sure that any future changes were checked automatically and didn't just work on my machine.

The workshop has a lot in it. Each session has its own restore/save points, and as a bonus there is an Angular SPA Services option as well as a Docker option.

- Session #1 | Get bits installed, build the back-end application with basic EF model

- Session #2 | Build out back-end, extract EF model

- Session #3 | Add front-end, render agenda, set up front-end models

- Session #4 | Add authentication, add admin policy, allow editing sessions, users can sign-in with Twitter and Google, custom auth tag helper

- Session #5 | Add user association and personal agenda

- Session #6 | Deployment, Azure and other production environments, configuring environments, diagnostics

- Session #7 | Challenges

- Session #8 | SPA front-end

Updating the code

Migrating from 2.0 to 2.1 is not overly hard, as documented in the blog post published when 2.1 was released. It's more about not missing one of the *.csproj files or NuGet packages, and making sure that each project passes dotnet restore and dotnet build. If those checks pass, we run dotnet publish where appropriate, and then there is the Docker option too.
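The per-project check described above can be sketched as a small shell script. This is only a minimal sketch, not the workshop's actual build definition: the function names `find_projects` and `check_projects` and the `DOTNET` override variable are assumptions made for illustration. Only `dotnet restore` and `dotnet build` come from the text.

```shell
# Hypothetical helper: list every .csproj file under a root directory, sorted.
find_projects() {
  find "${1:-.}" -type f -name '*.csproj' | sort
}

# Hypothetical helper: restore and build each project, stopping at the
# first failure. Set DOTNET=echo for a dry run that only prints the commands.
check_projects() {
  find_projects "${1:-.}" | {
    status=0
    while IFS= read -r proj; do
      "${DOTNET:-dotnet}" restore "$proj" || { status=1; break; }
      "${DOTNET:-dotnet}" build "$proj" --no-restore || { status=1; break; }
    done
    # The brace group's exit status becomes the function's exit status.
    [ "$status" -eq 0 ]
  }
}
```

Calling `check_projects .` from the repository root would then fail as soon as any project no longer restores or builds, which is the kind of signal a CI build needs.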

Visual Studio Team Services

VSTS is awesome, more so than I expected. I have used Travis and Circle CI in the past on my open source projects, and I'm familiar with Jenkins. Looking at what I needed to accomplish, this was the right tool for the job.

Building the pipeline

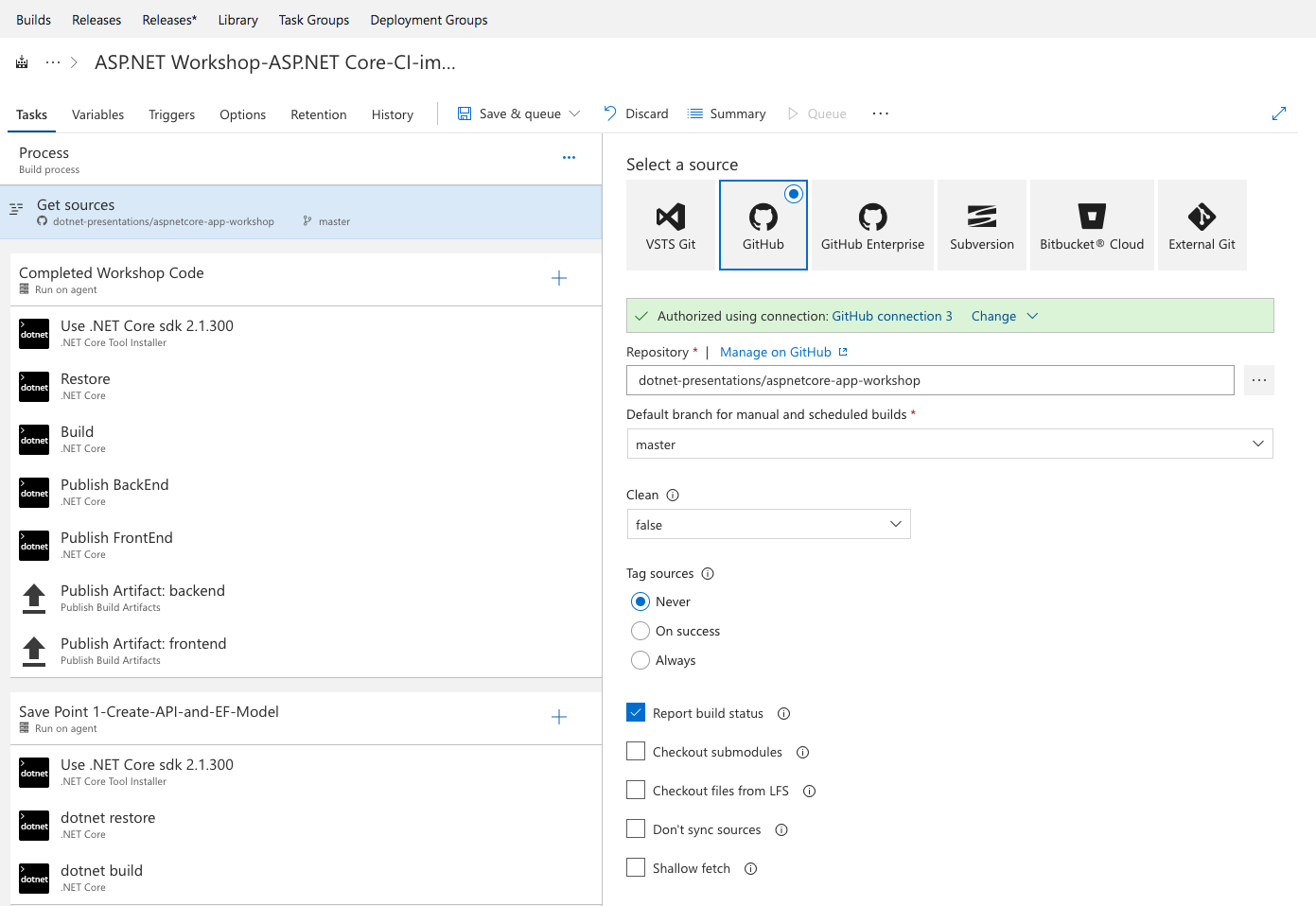

First, I just needed to get the code from the repo using the GitHub OAuth connector.

Next, it was pretty easy to add the steps for building the app at every save point. I accomplished that by creating a "phase" for each.

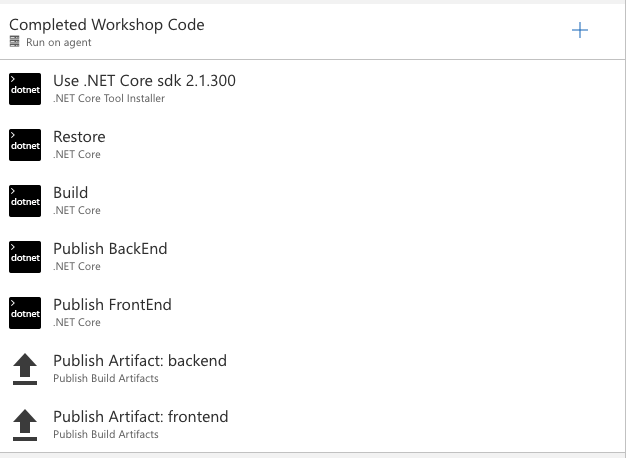

Here the first phase builds the code in the /src folder, which is the completed workshop, and produces two artifacts: backend.zip (Web API app) and frontend.zip (Razor Pages app).

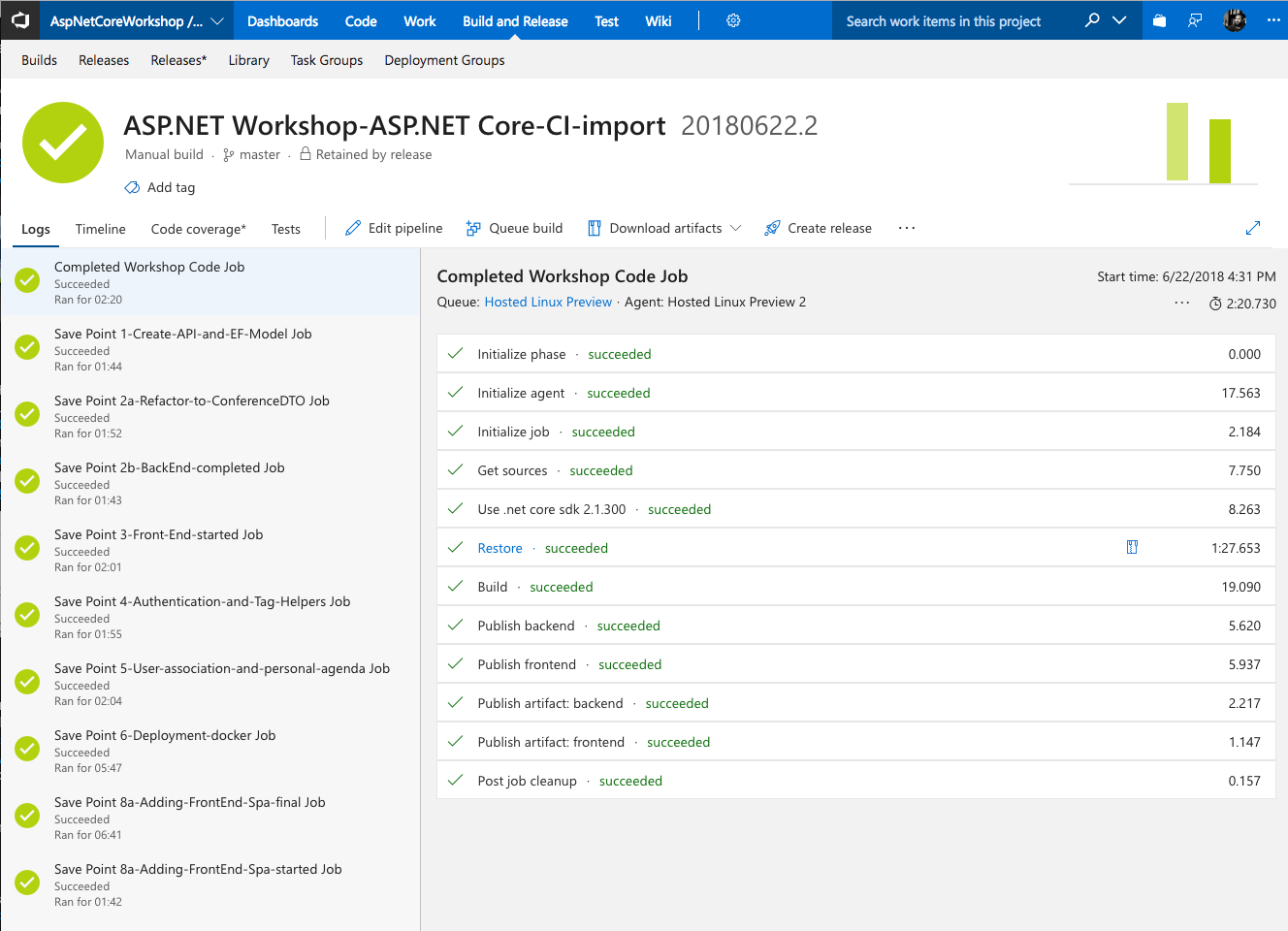

Multiple "phases" are defined, and when the build is initiated, either manually or via a trigger (PR or merge), they will run in parallel, sequentially, or both, depending on build agent availability and any dependencies between phases.

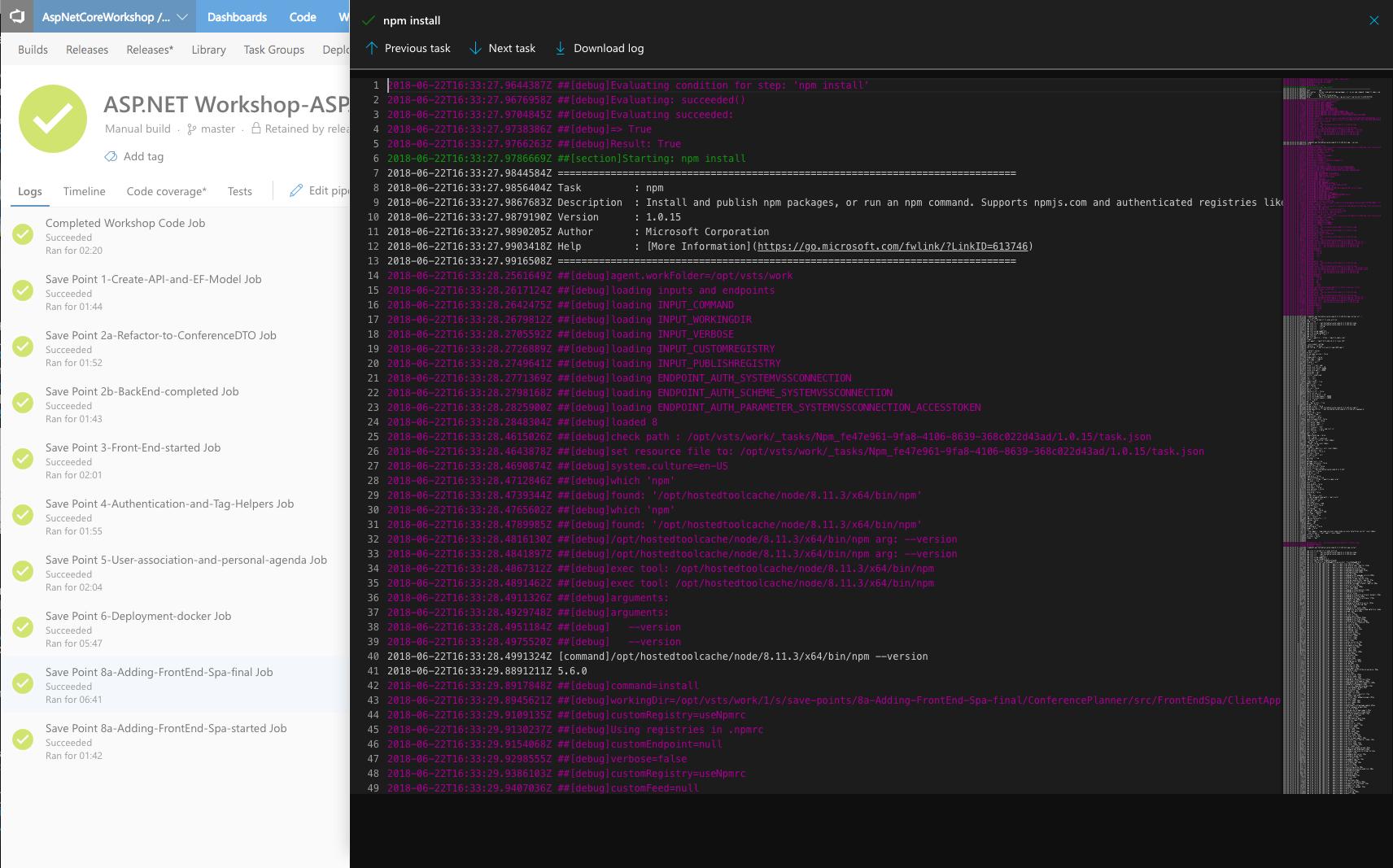

This screenshot shows all of the phases completed and a summary of each of the steps for the "Completed Workshop" phase or job.

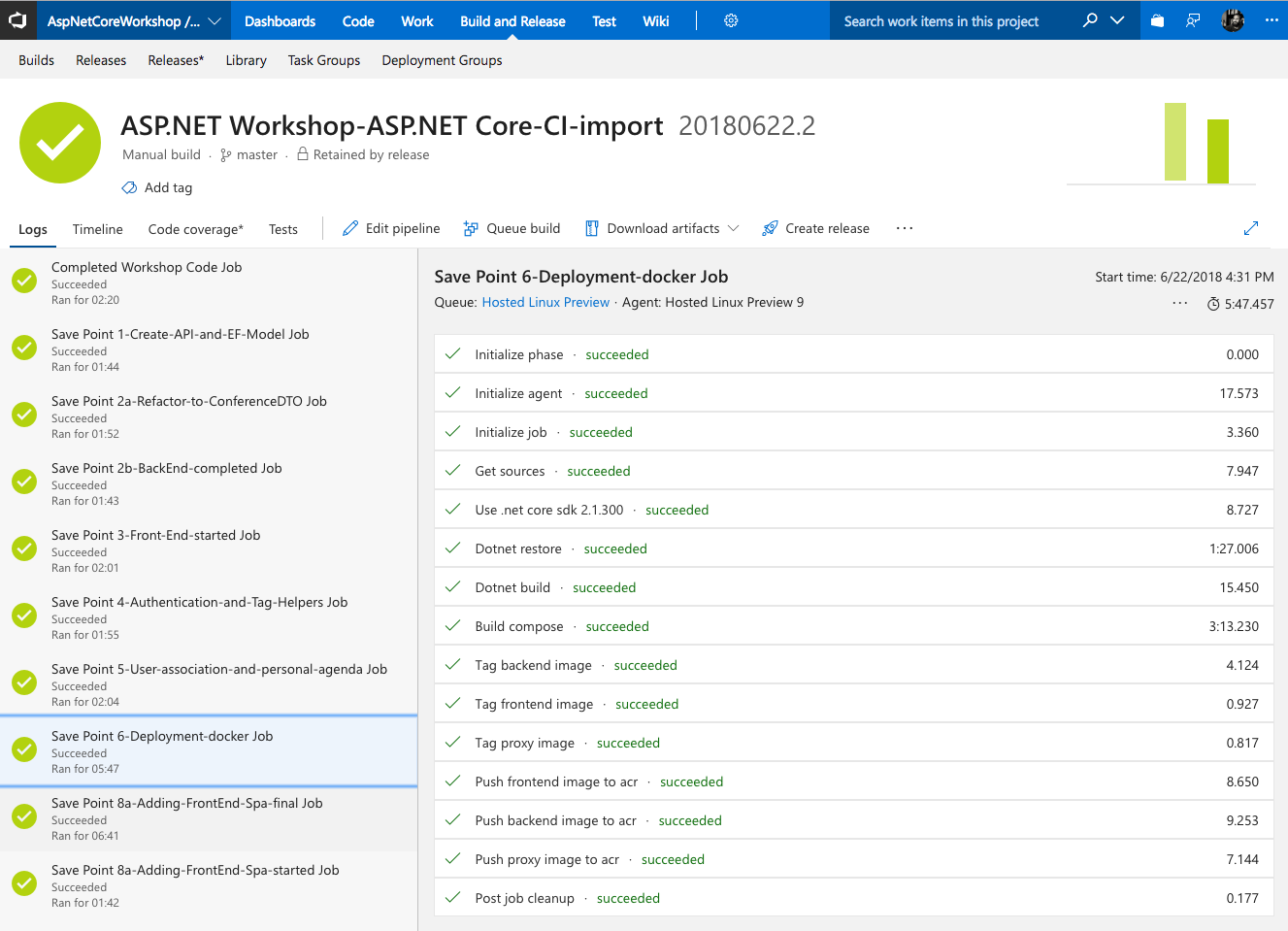

Here is the summary of the steps of the Docker option.

Clicking on any of the individual steps within the phase shows detailed logs of what occurred.

Releases

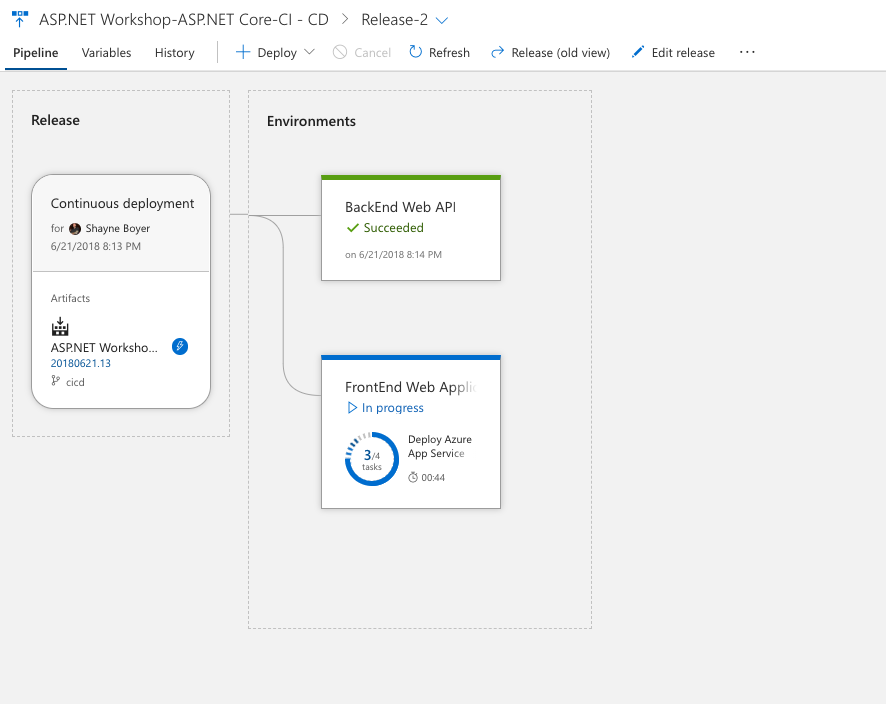

When the build completes successfully, the artifacts from the first phase, backend.zip and frontend.zip, are available as triggers for a release. Here we've set up a release so that whenever a successful build completes and new artifacts are available, backend.zip and frontend.zip are each deployed to an Azure App Service via Web Deploy.

Other releases

As a part of the build process, the "deployment-docker" build phase creates a number of Docker images: frontend, backend, and an nginx proxy image. These are pushed to an Azure Container Registry (ACR).

A multi-container Azure App Service on Linux has a webhook that is notified when these images are updated, and it pulls the new images into the service.
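As a rough illustration of the build-and-push step, here is a minimal sketch using plain `docker build` and `docker push` rather than the Docker Compose setup used in the actual build. The registry name, the image tag, the per-image directory layout (`./frontend`, `./backend`, `./nginx`), and the helper names are all assumptions. It also assumes you are already logged in to the registry.

```shell
# ACR login servers have the form <registry-name>.azurecr.io.
# Hypothetical helper: compose a full image reference for a registry.
acr_image() {
  printf '%s.azurecr.io/%s:%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: build and push the three images named in the post.
# The ./frontend, ./backend and ./nginx build contexts are assumed paths.
# Set DOCKER=echo for a dry run that only prints the commands.
push_images() {
  registry="$1"
  tag="$2"
  for name in frontend backend nginx; do
    image=$(acr_image "$registry" "$name" "$tag")
    "${DOCKER:-docker}" build -t "$image" "./$name" || return 1
    "${DOCKER:-docker}" push "$image" || return 1
  done
}
```

For example, `DOCKER=echo push_images myregistry 1.0` prints the six commands that would run without touching Docker.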

Custom agents, Docker, and more goodness

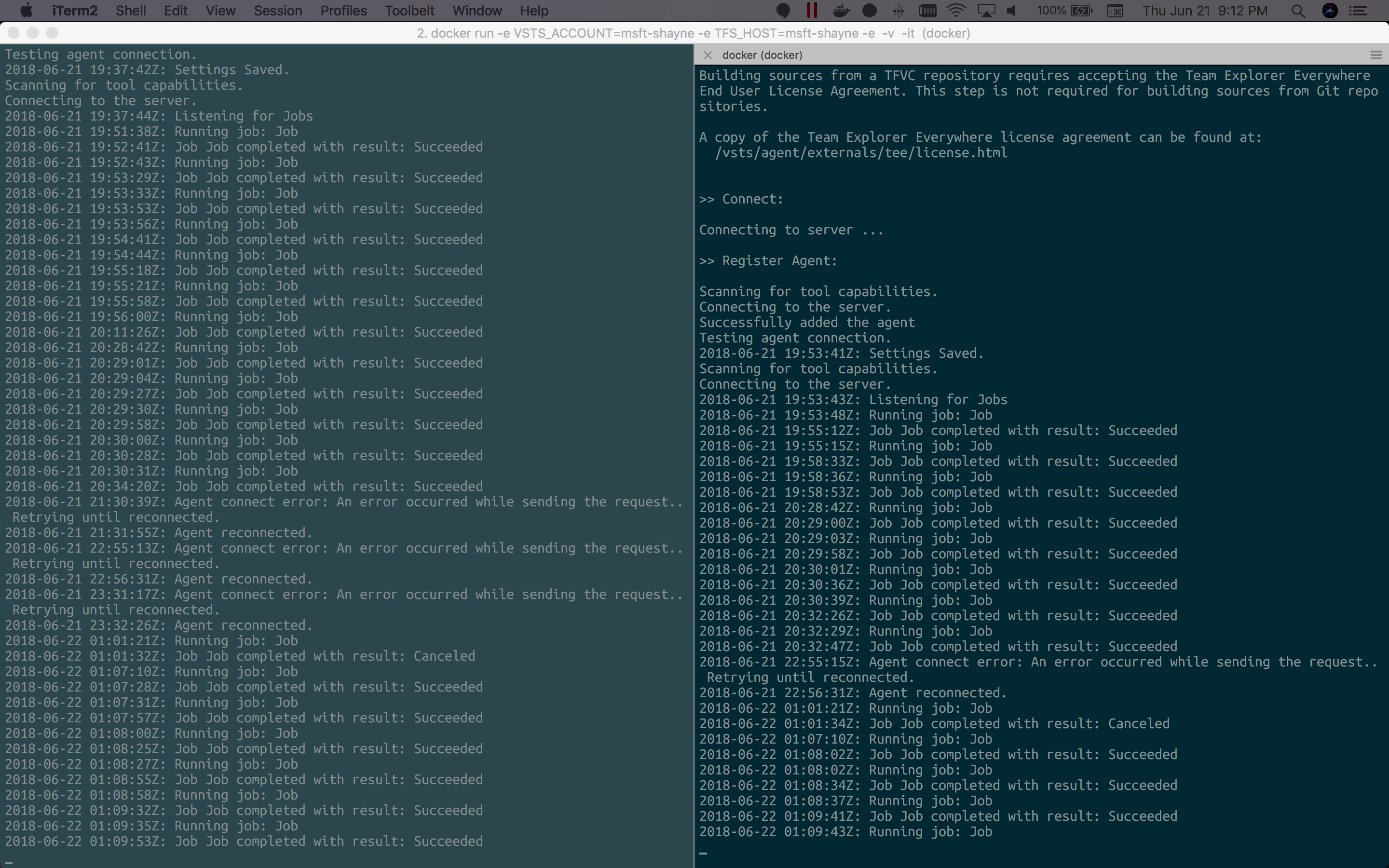

Each build pipeline and/or phase can be configured to use a specific build agent. The build agent is the machine that hosts the VSTS agent and performs the build. In the workshop build, we've set it up to build on a Linux host. However, we could have selected Windows or macOS, or configured our own agent.

Using a Docker VSTS Agent

The VSTS team publishes many different options for running the vsts-agent inside Docker. See the repo on Docker Hub.

You will need a personal access token (PAT) from your Visual Studio Team Services instance.

I used the microsoft/vsts-agent:ubuntu-16.04-tfs-2017-u1-docker-17.03.0-ce-standard image as it included the Docker Compose capabilities needed to produce and push the images to ACR.

Note that you cannot run "Docker in Docker" here, so Docker tasks inside the agent container need access to the host's Docker daemon. We get that by binding the Docker socket from the host into the container.

    docker run \
      -e VSTS_ACCOUNT=myinstance-shayne \
      -e TFS_HOST=myinstance-shayne \
      -e VSTS_TOKEN=xxxxxxxxxxxxxxxxceyeqvu3thm6rxdfjooo3tgquyu6qu26a \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -it microsoft/vsts-agent:ubuntu-16.04-tfs-2017-u1-docker-17.03.0-ce-standard

Running the container downloads the VSTS agent and attaches it to the build server as an available agent for builds. It was fun to experiment with this. I ran four on my Mac, started a few in Azure Container Service (this took a while to start, as the images are 3 GB), and used them for the non-Docker build phases.
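Starting several agents on one host, as described above, could look something like the following sketch. The `start_agents` function, the `vsts-agent-N` container names, and the detached `-d` mode (instead of the interactive `-it` used earlier) are assumptions for illustration. `VSTS_ACCOUNT` and `VSTS_TOKEN` are expected to be set in the calling shell, and `-e NAME` without a value passes them through to the container.

```shell
# Hypothetical helper: start N agent containers in the background.
# Set DOCKER=echo for a dry run that only prints the commands.
start_agents() {
  count="$1"
  image="microsoft/vsts-agent:ubuntu-16.04-tfs-2017-u1-docker-17.03.0-ce-standard"
  i=1
  while [ "$i" -le "$count" ]; do
    # Bind the host's Docker socket so Docker tasks run against the host daemon.
    "${DOCKER:-docker}" run -d \
      --name "vsts-agent-$i" \
      -e VSTS_ACCOUNT -e VSTS_TOKEN \
      -v /var/run/docker.sock:/var/run/docker.sock \
      "$image" || return 1
    i=$((i + 1))
  done
}
```

With the two variables set, `start_agents 4` would start four containers named `vsts-agent-1` through `vsts-agent-4`.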

Resources

Getting started with VSTS is super easy; check out the docs example for .NET Core: "Build your first CI/CD pipeline for .NET Core".